Places are still available on the JPEG 2000 seminar to be held at the Wellcome Trust on 16 November.

Draft programme with timetable and confirmed speakers:

09:00 Registration, coffee

10:00 Welcome, introduction

Christy Henshaw, Wellcome Library, Chair of JP2K-UK

Morning session Chair: William Kilbride, Executive Director, Digital Preservation Coalition

10:10 What did JPEG 2000 ever do for us?

Simon Tanner, Director, Kings Digital Consultancy Service

10:40 JPEG 2000 standardization - a pragmatic viewpoint

Richard Clark, UK head of delegation to JPEG and MD of Elysium Ltd.

11:10 JPEG 2000 profiles

Five ten-minute presentations moderated by Sean Martin, Head of Architecture and Development, British Library

12:10 IIPImage and OldMapsOnline

Petr Zabicka, Head of R&D, Moravian Library, Czech Republic

12:40 LUNCH

Early afternoon session Chair: Dave Thompson, Digital Curator, Wellcome Library

13:40 JP2K for preservation and access, experiences from the National Library of Norway

Svein Arne Brygfjeld, National Library of Norway

14:10 Web presentation of JPEG 2000 images

Sasa Mutic, Geneza and Ivo Iossiger, 4DigitalBooks, Switzerland

14:40 JPEG 2000 for long-term preservation in practice: problems, challenges and possible solutions

Johan van der Knijff, Koninklijke Bibliotheek (NL)

15:10 Coffee

Late afternoon session Chair: Simon Tanner, Director, Kings Digital Consultancy Service

15:40 Delivering High-Resolution JPEG2000 Images and Documents over the Internet

Gary Hodkinson, MD of Luratech Ltd.

16:10 Pros and Cons of JPEG 2000 for video archiving

Katty van Mele, IntoPIX

16:40 Questions and discussion

Moderated by Ben Gilbert, Photographer, Wellcome Library

17:10 Concluding remarks

October 18, 2010

October 01, 2010

Guest post: Examining losses, a simple Photoshop technique for evaluating lossy-compressed images

Bill Comstock, Head of Imaging Services at Harvard College Library, writes a second post about using Photoshop to evaluate lossy compressed images.

If you decide to employ JPEG2000’s lossy compression scheme, you will also have to determine the degree to which you are willing to compress your files; you’ll have to work to identify that magic spot where you realize a perfect balance between file size reduction and the preservation of image quality.

Of course, there is no magic spot and no perfect answer: not for any single image, and certainly not for the large batches of images that you will want to process using a single compression recipe. Whether you control compression by setting a compression ratio, by using a software-specific “quality” scale, or by targeting a signal-to-noise ratio, you will want to test a variety of settings on a range of images, scrutinize the results, and then decide where to set your software.

Below I describe a Photoshop technique for overlaying an original, uncompressed source image with a compressed version of that image, both to measure the difference between the two and to draw your attention to the regions where the compressed version differs most from the source. Credit for the technique belongs to Bruce Fraser.

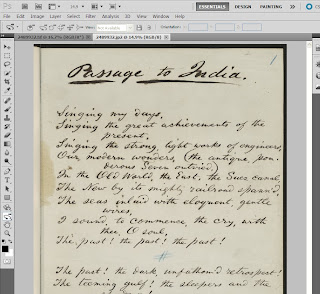

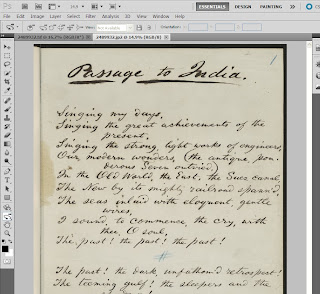

1. First, open up the two images that you want to compare (the original source image, and the compressed JP2 derivative) in Photoshop.

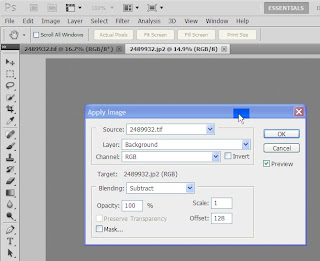

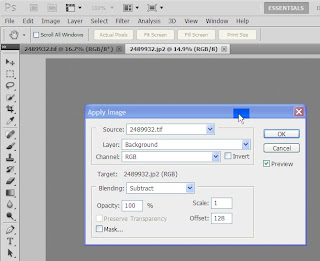

2. Next, go to the “Image” menu and select “Apply Image”.

3. Set Blending to “Subtract”, Scale to “1”, and Offset to “128”.

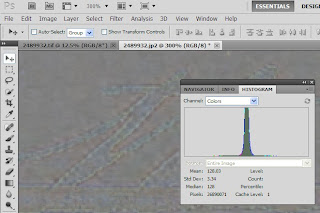

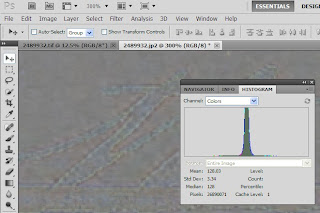

4. The differences between the two images are now visible (you may need to magnify the image beyond 100%), and the standard deviation of the resulting difference image can be read from the Histogram panel.

(A standard deviation of zero, with every pixel sitting at the offset value of 128, indicates that the two copies are identical and that the compressed version was losslessly compressed.)
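The arithmetic behind steps 3 and 4 can be sketched outside Photoshop. The following is a minimal Python illustration, not Photoshop's own implementation. It assumes 8-bit single-channel images held as flat lists of pixel values, and that the Subtract blend computes one image minus the other, divided by Scale, plus Offset, clamped to 0..255. The function names and sample pixel values are hypothetical, and the Histogram panel may compute its standard deviation slightly differently from the population formula used here.

```python
from statistics import pstdev


def subtract_with_offset(original, compressed, scale=1, offset=128):
    """Per-pixel (original - compressed) / scale + offset, clamped to 0..255."""
    if len(original) != len(compressed):
        raise ValueError("images must have the same number of pixels")
    result = []
    for a, b in zip(original, compressed):
        value = round((a - b) / scale + offset)
        result.append(max(0, min(255, value)))
    return result


def difference_std(original, compressed):
    """Population standard deviation of the offset difference image."""
    return pstdev(subtract_with_offset(original, compressed))


# Hypothetical pixel values, for illustration only.
original = [10, 200, 128, 64, 255, 0]
lossless = list(original)             # identical copy
lossy = [12, 198, 128, 65, 251, 3]    # small per-pixel errors

print(subtract_with_offset(original, lossless))  # every pixel is 128
print(difference_std(original, lossless))        # zero: copies are identical
print(subtract_with_offset(original, lossy))     # values scattered around 128
print(difference_std(original, lossy))           # grows with the error
```

Identical copies give a flat mid-gray result, so any departure from 128 marks a pixel that the compression changed.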

Another option: you can also create a two-layer image in Photoshop in which one layer is the source image and the second layer is the compressed copy, and then set the upper layer’s blending mode to “Difference”. You may find the technique described in detail above preferable, if only because it makes the variance between the two copies more easily visible by shifting the pixel-to-pixel differences into the middle gray region.
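To see why the offset helps, compare the two blends on the same pixels. This is a rough Python sketch under the same assumptions as the technique itself: 8-bit single-channel values, a Difference blend that takes the absolute per-pixel difference, and a Subtract blend with Scale 1 and Offset 128. The function names and sample values are hypothetical.

```python
def difference_blend(original, compressed):
    """Absolute per-pixel difference, as in a Difference blend."""
    return [abs(a - b) for a, b in zip(original, compressed)]


def subtract_offset(original, compressed, offset=128):
    """Signed per-pixel difference shifted to mid-gray, clamped to 0..255."""
    return [max(0, min(255, a - b + offset)) for a, b in zip(original, compressed)]


# Hypothetical pixel values, for illustration only.
original = [10, 200, 128, 64, 255, 0]
lossy = [12, 198, 128, 65, 251, 3]

# Difference: small errors land next to black and lose their sign.
print(difference_blend(original, lossy))

# Subtract + offset: the same errors sit around mid-gray, and values
# below or above 128 show the direction of each error.
print(subtract_offset(original, lossy))
```

Small errors in the Difference result are nearly black and hard to distinguish on screen, whereas the offset version places them around mid-gray.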

Within the group that I manage, we modulate compression using PSNR. We test each candidate setting on a large number of images and then examine some number of the least and most compressed images in the set. We repeat the process until we have zeroed in on what seems to be the best setting.
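For readers unfamiliar with PSNR, here is a minimal Python sketch of the standard definition for 8-bit data, together with a helper for picking out the least and most compressed images in a test batch. It is not the group's actual tooling. The function names, file names, and byte counts are all hypothetical, and images are assumed to be flat lists of single-channel pixel values.

```python
import math


def psnr(original, compressed, max_value=255):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE)."""
    if not original or len(original) != len(compressed):
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(original, compressed)) / len(original)
    if mse == 0:
        return math.inf  # identical copies: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)


def extremes_by_ratio(sizes, n=1):
    """Return (least compressed, most compressed) names by compression ratio.

    `sizes` maps an image name to (original_bytes, compressed_bytes).
    """
    ranked = sorted(sizes, key=lambda name: sizes[name][0] / sizes[name][1])
    return ranked[:n], ranked[-n:]


# Hypothetical pixel values and file sizes, for illustration only.
original = [10, 200, 128, 64, 255, 0]
lossy = [12, 198, 128, 65, 251, 3]
print(round(psnr(original, lossy), 2))

batch = {
    "page_001": (3000, 300),
    "page_002": (3000, 100),
    "page_003": (3000, 600),
}
least, most = extremes_by_ratio(batch)
print(least, most)
```

With a fixed PSNR target the resulting file sizes vary from image to image, so the images at either end of the compression-ratio ranking are natural candidates for visual inspection.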

Good luck!

