Thanks for provocative blogs

Thanks to Johan van der Knijff and Dave Thompson for the helpful blog postings here that frame some important questions about the sustainability of the JPEG 2000 format. Caroline Arms and I were flattered to see that our list of format-assessment factors was cited, along with the criteria developed at the UK National Archives. We certainly agree that many of these factors have a theoretical turn and that judgments about sustainability must be leavened by actual experience.

We also call attention to the importance of what we call Quality and Functionality factors (hereafter Q&F factors). It is possible that some formats will "score" high enough on these factors to outweigh perceived shortcomings on the Sustainability Factor front.

As I drafted this response, I benefited from comments from Caroline and Michael Stelmach, the Library of Congress staffer who chairs the Federal Agencies Still Image Digitization Guidelines Working Group.

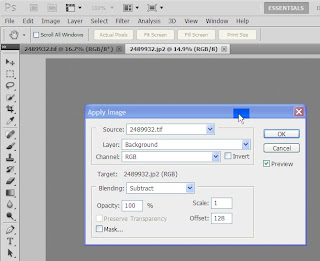

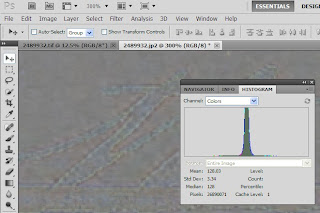

Colorspace (as it relates to the LoC's Q&F factor Color Maintenance)

We agree that the JPEG 2000 specification would be improved by the ability to use and declare a wider array of color spaces and/or ICC profile categories. We join you in endorsing Rob Buckley's valuable work on a JP2 extension to accomplish that outcome.
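To make "declaring" a color space concrete: in a JP2 file the declaration sits in a Colour Specification ('colr') box inside the JP2 Header ('jp2h') superbox. The sketch below walks the box structure and reports what a file declares. It assumes the Part 1 layout as we understand it: METH = 1 means a four-byte enumerated value follows (16 = sRGB, 17 = greyscale, 18 = sYCC), and METH = 2 means a restricted ICC profile follows (JP2 limits these to monochrome and three-component matrix-based input profiles). The function names are our own, and the code ignores the richer options of JPX (Part 2).

```python
import struct

# Enumerated colour spaces allowed in a JP2 (Part 1) 'colr' box.
ENUM_CS = {16: "sRGB", 17: "greyscale", 18: "sYCC"}


def iter_boxes(data, start=0, end=None):
    """Yield (type, payload_start, payload_end) for each box in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        lbox, tbox = struct.unpack(">I4s", data[pos:pos + 8])
        header = 8
        if lbox == 1:  # a 64-bit XLBox length follows the type field
            (lbox,) = struct.unpack(">Q", data[pos + 8:pos + 16])
            header = 16
        elif lbox == 0:  # the box extends to the end of the enclosing data
            lbox = end - pos
        if lbox < header or pos + lbox > end:
            raise ValueError(f"malformed box at offset {pos}")
        yield tbox.decode("latin-1"), pos + header, pos + lbox
        pos += lbox


def read_jp2_colour(data):
    """List the colour declarations found in the JP2 Header ('jp2h') superbox."""
    results = []
    for btype, p0, p1 in iter_boxes(data):
        if btype != "jp2h":
            continue
        for sub, s0, s1 in iter_boxes(data, p0, p1):
            if sub != "colr":
                continue
            meth = data[s0]  # METH; the next two bytes are PREC and APPROX
            if meth == 1:  # enumerated colour space: 4-byte EnumCS
                (enum_cs,) = struct.unpack(">I", data[s0 + 3:s0 + 7])
                results.append(("enumerated", ENUM_CS.get(enum_cs, f"unknown {enum_cs}")))
            elif meth == 2:  # restricted ICC profile fills the rest of the box
                results.append(("restricted ICC", s1 - (s0 + 3)))
            else:
                results.append(("unrecognised METH", meth))
    return results
```

Called on the bytes of a .jp2 file, for example `read_jp2_colour(open(path, "rb").read())`, it would return `[("enumerated", "sRGB")]` for a file whose single colr box declares sRGB.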

When Michael and I were chatting about this topic, he said that he had been doing some informal evaluations of the spectra represented in printed matter at the Library of Congress. The investigation is informal (so far) and his comment was off the cuff, but he said he had been surprised to find that the colors he had identified in a wide array of original items could indeed be represented within the sRGB color gamut, one of the enumerated color spaces in part 1 of the JPEG 2000 standard.
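What "representable within the sRGB gamut" means can be sketched as a simple test: convert a measured CIE XYZ color to linear sRGB and check that all three components fall between 0 and 1. This is not a description of Michael's method, only an illustration. It assumes D65-referenced XYZ values scaled so that white has Y = 1, and uses the XYZ-to-linear-sRGB matrix from IEC 61966-2-1 rounded to four decimals; the small tolerance absorbs that rounding. The function names are hypothetical.

```python
# XYZ (D65, white Y = 1.0) to linear sRGB, per IEC 61966-2-1 (4-decimal values).
XYZ_TO_LINEAR_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def xyz_to_linear_srgb(xyz):
    """Multiply an (X, Y, Z) triple by the conversion matrix."""
    return tuple(sum(m * c for m, c in zip(row, xyz)) for row in XYZ_TO_LINEAR_SRGB)


def in_srgb_gamut(xyz, tol=1e-3):
    """True if every linear sRGB component lies in [0, 1], within a small tolerance."""
    return all(-tol <= v <= 1 + tol for v in xyz_to_linear_srgb(xyz))


print(in_srgb_gamut((0.95047, 1.0, 1.08883)))  # D65 white -> True
print(in_srgb_gamut((0.1, 0.5, 0.1)))          # a highly saturated green -> False
```

The saturated green fails because its red component comes out negative, which is exactly the kind of color an extended-gamut space is meant to hold.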

Michael added that he knew that some practitioners favor scRGB, which is not included in the JPEG 2000 enumerated list, either because of scRGB's wider gamut or perhaps because it allows brightness to be represented linearly with respect to intensity rather than only in gamma-corrected form. The extended gamut (compared to sRGB) will be especially valuable when reproducing items like works of fine art. And we agree with Johan van der Knijff's statement that there will be times when we will wish to go beyond input-class ICC profiles and embrace 'working' color spaces. All the more reason to support Rob Buckley's effort.
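The difference between gamma-corrected and linear encodings can be illustrated with the sRGB transfer function defined in IEC 61966-2-1. scRGB uses the same primaries as sRGB but stores linear values and permits components below 0 and above 1, which is how it reaches colors outside the sRGB gamut. The two conversion functions below are our own naming, written as a sketch for components in the 0..1 range.

```python
def srgb_to_linear(c):
    """Decode a gamma-corrected sRGB component (0..1) to linear intensity."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v):
    """Encode a linear intensity (0..1) as a gamma-corrected sRGB component."""
    if v <= 0.0031308:
        return 12.92 * v
    return 1.055 * v ** (1 / 2.4) - 0.055


# A code value halfway up the sRGB scale is only about 21% of full intensity.
print(round(srgb_to_linear(0.5), 4))
```

The nonlinearity spends more code values on dark tones, which suits display; a linear encoding such as scRGB keeps values proportional to light intensity, which some practitioners prefer for processing.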

Adoption (the LoC Sustainability criteria include adoption as a factor)

This is an area in which we all have mixed feelings: there is adoption of JPEG 2000 in some application areas, but we wish there were more. Caroline pointed to one positive indicator: many practitioners who preserve and present high-pixel-count images, such as scanned maps, have embraced JPEG 2000 in part because of its support for efficient panning and zooming. The online presentation of maps at the Library of Congress is one good example (for a given map, you see an 'old' JPEG in the browser, generated from JPEG 2000 data under the covers).
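The efficient panning and zooming comes from the wavelet decomposition: an image coded with N decomposition levels can be decoded at N + 1 resolutions, each roughly half the width and height of the one above it, and a viewer need only decode the level and region it is showing. A small sketch, assuming an image origin at (0, 0) so that reduction level r has dimensions ceil(W / 2^r) by ceil(H / 2^r); the map size and window are made-up numbers, and `pick_level` is a hypothetical helper, not part of any viewer's API.

```python
import math


def resolution_sizes(width, height, levels):
    """(width, height) at each reduction level r = 0..levels; r = 0 is full size."""
    return [(math.ceil(width / 2**r), math.ceil(height / 2**r)) for r in range(levels + 1)]


def pick_level(width, height, levels, view_w, view_h):
    """Highest reduction level that still covers the window (0 if none is smaller)."""
    best = 0
    for r, (w, h) in enumerate(resolution_sizes(width, height, levels)):
        if w >= view_w and h >= view_h:
            best = r
    return best


# A hypothetical 20,000 x 15,000 pixel map coded with 5 decomposition levels.
for r, size in enumerate(resolution_sizes(20000, 15000, 5)):
    print(r, size)
print("level for a 1024 x 768 window:", pick_level(20000, 15000, 5, 1024, 768))
```

For the overview, the viewer decodes the 1250 x 938 level rather than all 300 million pixels; zooming in moves toward level 0 but only for the tiles or precincts in view.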

Caroline adds that the geospatial community uses JPEG 2000 as a standard (publicly documented, non-proprietary) alternative to the proprietary MrSID format. Both formats continue to be used, and LizardTech tools now support both equally. Meanwhile, GeoTIFF is widely used too. Caroline notes that LizardTech re-introduced a free stand-alone viewer for JPEG 2000/MrSID images last year in response to customer demand. And Helioviewer, a new NASA service for solar physics, is based on JPEG 2000; NASA's website includes a justification for using the format.

For my part, I can report encountering some JPEG 2000 uptake in moving image circles, ranging from its use in the digital cinema 'package' specification (see a slightly out-of-date summary) to its inclusion in Front Porch Digital's SAMMA device, which is used to reformat videotapes in a number of archives, including the Library of Congress.

Meanwhile, Michael recalled seeing papers that explored the use of JPEG 2000 compression in medical imaging (where JPEG 2000 is an option in the DICOM standard), with findings indicating that radiologists' diagnoses were just as successful with JPEG 2000-compressed images as with uncompressed images. An online search using terms like "JPEG2000, medical imaging, radiology" will turn up a number of relevant articles on this topic, including Juan Paz et al., 2009, "Impact of JPEG 2000 compression on lesion detection in MR imaging," in Medical Physics, which provides evidence to this effect.

On the other hand (negative indicators, I guess), we have the example of non-adoption by professional still photographers. On the creation-and-archiving side, their fondness for sensor data motivates them to keep raw files or to wrap that raw data in DNG. I was curious about the delivery side and looked at the useful dpBestFlow website and book, where the author-photographer Richard Anderson reports that he and his professional brethren deliver the following to their customers: RGB or CMYK files (I assume in TIFF or one of the pre-press PDF wrappers), "camera JPEGs" (old style), "camera TIFFs," or DNGs or raw files. There is no question that the lack of uptake by professional photographers hampers the broader adoption of JPEG 2000.

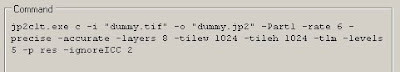

Software tools (their existence is part of the Sustainability Factor of Adoption; their misbehavior is, um, misbehavior)

It was very instructive to see Johan van der Knijff's report on his experiments with LuraTech, Kakadu, Photoshop, and ImageMagick. If he is correct, these packages do misbehave a bit, and we should all encourage the manufacturers to fix what is broken. There is, of course, a dynamic between application developers and adoption by their customers. If there is not greater uptake in realms like professional photography, will software developers like Adobe take the time to fix things, or even continue to support the JPEG 2000 side of their products?

Caroline, Michael, and I pondered Johan van der Knijff's suggestion that "the best way to ensure sustainability of JPEG 2000 and the JP2 format would be to invest in a truly open JP2 software library." We found ourselves of two minds about this. On the one hand, such a thing would be very helpful but, on the other, building such a package is definitely a non-trivial exercise. What level of functionality would be desired? The more we want, the more difficult it is to build. Johan van der Knijff's comments about JasPer remind us that some open source packages never receive enough labor to produce a product that rivals commercial software in reliability, robustness, and functional richness. Would we be happy with a play-only application that lets us read files we created years earlier with commercial packages that, by that future time, are defunct? In effect, such an application would be the front end of a format-migration tool, restoring the raster data so that it can be re-encoded into our new preferred format. As we thought about this, we wondered whether people would come forward to keep updating the software for new programming languages and operating systems, so that it would still work when we needed it.

As a sidebar, Johan van der Knijff summarizes David Rosenthal's argument that "preserving the specifications of a file format doesn’t contribute anything to practical digital preservation" and "the availability of working open-source rendering software is much more important." We would like to assert that you gotta have 'em both: it would be no good to have the software and not the spec to back it up.

Error resilience

Preamble to this point: In drafting this, I puzzled over the fit of error resilience to our Sustainability and Quality/Functionality factors. In our description of JPEG 2000 core coding we mention error resilience in the Q&F slot Beyond Normal. But this might not be the best place for it. Caroline points out that error resilience applies beyond images and she notes that it may conflict with transparency (one of our Sustainability Factors). We find ourselves wishing for a bit of discussion of this sub-topic. Should error resilience be added as a Sustainability Factor, or expressed within one of the existing factors? Meanwhile, how important is transparency as a factor?

Here's the point in the case of JPEG 2000: Johan van der Knijff's blog does not comment on the error resilience elements in the JPEG 2000 specification. These are summarized in annex J, section 7, of the specification (pages 167-68 in the 2004 version), where the need for error resilience is associated with the "delivery of image data over different types of communication channels." We have heard varying opinions about the potential impact of these elements on long term preservation but tend to feel, "it can't be bad."

Here are a few of the elements, as outlined in annex J.7:

- The entropy coding of the quantized coefficients is done within code-blocks. Since the encoding and decoding of the code-blocks are independent, bit errors in the bit stream of a code-block will be contained within that code-block.

- Termination of the arithmetic coder is allowed after every coding pass. Also, the contexts may be reset after each coding pass. This allows the arithmetic coder to continue to decode coding passes after errors.

- The optional arithmetic coding bypass style puts raw bits into the bit stream without arithmetic coding. This prevents the types of error propagation to which variable length coding is susceptible.

- Short packets are achieved by moving the packet headers to the PPM (packed packet headers, main header) or PPT (packed packet headers, tile-part header) marker segments. If there are errors, the packet headers in the PPM or PPT marker segments can still be associated with the correct packet by using the sequence number in the SOP (start of packet) marker segment.

- A segmentation symbol is a special symbol. Correctly decoding this symbol confirms that the bit-plane was decoded correctly, which allows error detection.

- A packet with a resynchronization marker (SOP) allows spatial partitioning and resynchronization. The SOP marker is placed in front of every packet in a tile, with a sequence number starting at zero and incremented with each packet.
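The resynchronization item at the end of the list can be sketched as a scan of the codestream for SOP marker segments. As we read the codestream syntax, an SOP is the two-byte marker 0xFF91, a two-byte length Lsop equal to 4, and a two-byte packet sequence number Nsop that wraps after 65535. A decoder that sees a gap in the sequence knows packets were lost or damaged and can resume at the next intact SOP. This is a toy under stated assumptions: it scans raw bytes instead of parsing tile-part headers, relying on our understanding that JPEG 2000 coded data is built so that marker codes above 0xFF8F do not occur inside it. The names are our own.

```python
import struct

SOP = b"\xff\x91"  # start of packet marker code


def find_sop_markers(codestream):
    """Return (offset, Nsop) for every well-formed SOP marker segment found."""
    found = []
    pos = codestream.find(SOP)
    while pos != -1:
        segment = codestream[pos + 2:pos + 6]
        if len(segment) == 4:
            lsop, nsop = struct.unpack(">HH", segment)
            if lsop == 4:  # Lsop is always 4 for a valid SOP segment
                found.append((pos, nsop))
        pos = codestream.find(SOP, pos + 2)
    return found


def missing_packets(markers):
    """Sequence numbers skipped between consecutive SOPs (modulo 65536)."""
    gaps = []
    for (_, prev), (_, cur) in zip(markers, markers[1:]):
        expected = (prev + 1) % 65536
        while expected != cur:
            gaps.append(expected)
            expected = (expected + 1) % 65536
    return gaps
```

On a stream whose SOPs carry sequence numbers 0, 1, and 3, `missing_packets(find_sop_markers(stream))` returns `[2]`: the damage is localized to one packet, and decoding can pick up again at packet 3.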

Thanks to the Wellcome Library for helping all of us focus on this important topic. We look forward to a continuing conversation.